The 2025 AI & Data Leadership Executive Survey, is an industry benchmark that surveys Fortune 1000 and global business leaders. This year, 97.6% of survey respondents identified themselves as C-suite executives or equivalents within their organization.

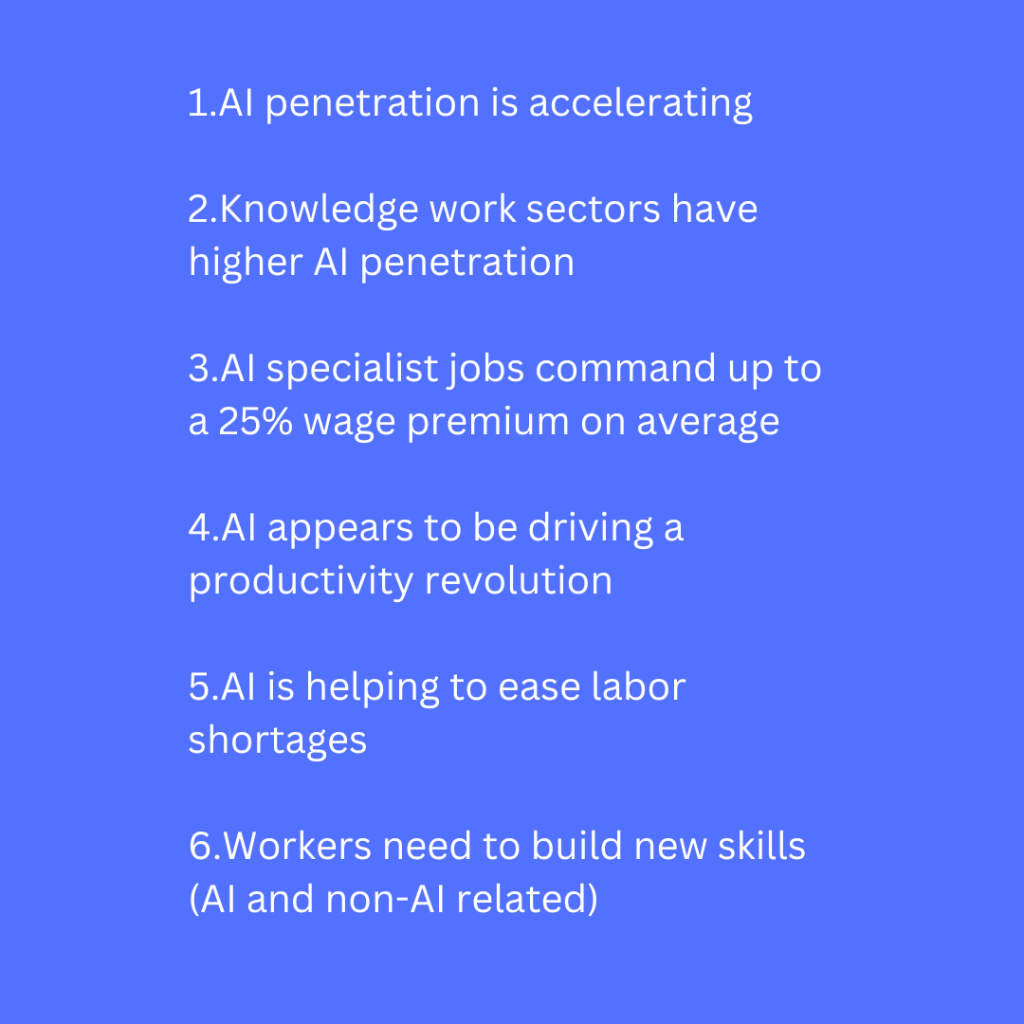

What is the state of Data and AI according to these leaders? There are 6 main takeaways

1- Corporate investments in AI and data are growing. The rapid emergence of AI/Generative AI, is fueling increased organizational investment in AI & data, with data & AI seen as a top organizational priority by a growing percentage of organizations

- 98% – Companies are increasing investment in data and AI

- 91% – Investments in Data & AI are a Top Organizational Priority

- 94% – Interest in AI is Leading to a Greater Focus on Data

2- Organizations are reporting business value from their AI investments. AI initiatives are at an early stage for most companies, but progress is accelerating, with more initiatives moving into production as firms are beginning to deliver measurable business value.

- Data and AI Investments:

- 18% – High Degree of Measurable Business Value

- 28% – Significant and Rapidly Growing Level of Business Value

- 32% – Modest but Increasing Amount of Incremental Business Value

- Generative AI Implementation Efforts

- 24% – Implemented in Production At Scale

- 47% – Early Stage: Implemented in Limited Production

- 29% – Early Stage: Experimentation, Testing, Planning & Design

- Primary Business Value generated by AI/Gen AI

- 58% – Exponential productivity gains and Efficiency

- 16% – Liberate Knowledge Workers from Mundane Tasks

- 17% – Improve Customer Service and Experience

- 10% – Deliver Business Growth

3- Transformation due to AI will be gradual for most organizations. A minority of organizations claim to be data/AI driven or having established a data/AI business culture., and most organizations continue to struggle with adoption and transformation, with cultural challenges noted as the greatest obstacle to progress.

- 92% – The primary barrier to establishing data- and AI-driven cultures is people and organization change

4- Organizations are focusing on responsible AI, safeguards, and guardrails. Responsible AI is an increasing priority for most organizations, as they focus on establishing safeguards and guardrails to ensure responsible AI utilization. Threats of misinformation and disinformation, as well as ethical bias, are ongoing concerns, as is the recognition that more AI talent is needed, and corporate boards require greater education on AI.

- Investment in Responsible AI.

- 74% – Investment in Responsible AI is a Top Corporate Priority

- 98% – Responsible AI Safeguards and Guardrails for Governing AI Must Be In Place

- 78% – Responsible AI Safeguards and Guardrails for Governing AI Are In Place Today

- 57% – Talent is in Place to Ensure Responsibly AI Implementation

- Primary business threat by AI

- 53% – Spread of Misinformation or Disinformation

- 20% – Ethical Bias

- 5% – Job Loss and Job Displacement

5- Organizations are hiring chief AI officers as AI and data leadership roles evolve. Data & AI leadership is in high demand, with Chief Data Officers (CDO/CDAO) and Chief Artificial Intelligence Officers (CAIO) being appointed. Additionally, more data & AI executives are focusing on delivering business value through growth and innovation, and reporting to business leaders.

- Data & AI Leadership Roles and Responsibilities

- 84% – Chief Data Officer (CDO/CDAO) Has Been Appointed

- 33% – Chief AI Officer (CAIO) Has Been Appointed

- Primary Focus of Data & AI Leadership (CDO/CDAO/CAIO)

- 80% – Offense -> Growth | Innovation | Transformation

- 20% – Defense -> Risk Management | Regulatory | Compliance

- Data & AI Leadership Reporting Structures/Reporting to

- 36% – Business Leadership | CEO | President | COO

- 47% – Technology Leadership | CIO | CTO | Head of Tech & Ops

- 11% – Transformation Leadership | Chief Digital Officer

6- AI is likely to be the most transformational technology in a generation. Organizations strongly believe in the transformational impact of AI, foreseeing significant opportunity for productivity and efficiency gains, with the potential benefits clearly outweighing the potential risks.

- Forecasting an AI Future

- 89% – AI is Likely to be the Most Transformational Technology in a Generation

- 61% – Generative AI Will be the Most Transformative Form of AI

- 97% – Overall Impact of AI will be Beneficial

In short, the future is bright for organizations investing in AI with the right Data and AI skills, talent, and leadership in place, and focus on Responsible AI, safeguards and guardrails.

You must be logged in to post a comment.